Make your PHP site fly, even on a small VM instance

Published: Wed, 13 May 2015 by Rad

Introduction

Step-by-step instructions on how to get the most out of your PHP-powered site. You have a small site running on PHP, one of the most popular and widely used web scripting languages. All the sweat you put into creating engaging content has started to bear fruit: the site is popular, traffic is growing exponentially and your little virtual instance is taking a proper pounding. It all starts to feel a bit sluggish. Your visitors see 502 errors, and your monitoring tool bombards you with emails reporting downtime every day. Upgrading your hosting seems like a good idea, but money is still scarce. nginx to the rescue! This article shows how to get maximum performance from your small instance.

There are many routes you can take to pinpoint performance problems and improve your PHP site. Usually the main problem lies with the database or IO operations, a poorly written application, hidden bugs which manifest only under some circumstances (usually higher load), a bloated framework, or a combination of all of these. This post is about only one thing: introducing nginx micro-caching for PHP into your stack. The article is aimed at *mid-experienced* developers and requires you to access a terminal, change web server settings and have some experience with nginx and php-fpm config files.

Prerequisites

- nginx as main web server, relatively current version (0.8+)

- php-fpm for PHP serving (good performance)

- preferably PHP 5.4+

- tmpfs, shared RAM - to be used for cached files location

Note: This is not about implementing a full caching system. For full caching, other solutions such as Varnish Cache are a better fit. The whole point of micro-caching is to smooth out unexpected traffic spikes. It works well when a limited number of your pages (e.g. a blog post or an article) get exposure on popular networks, which then drive traffic to your site.

Also, we do not use nginx in front of Apache in this setup. We use nginx as our preferred web server, serving all pages directly and using FastCGI to connect to php-fpm.

Setting up the whole LEMP stack is beyond the scope of this article, but you can find many resources on the Internet to help you with that.

Resource: How to install linux, nginx, MySQL, PHP (lemp) stack on centos 6

What is micro-caching and why to use it? Sounds complicated!

The theory is that you cache your pages for a short amount of time (for instance 1 second, or even 0.5 second). When a user requests a page, nginx caches the generated response, so the next request for the same page is served from the cache. With 200 users requesting the page within 1 second, only 1 in 200 has to wait for the full page to be built (and with nginx and a well-structured site this isn't a problem).

What happens in real life is that only a fraction of your pages get a traffic spike. nginx stores the output generated by PHP in its cache and returns the cached result for the cache lifetime. Once the entry expires (e.g. after 1 second), the page is regenerated by PHP and stored in the cache storage, preferably RAM, for the subsequent time period.
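A quick way to see why such a short lifetime helps is to count how often the backend would have to build one page during a burst. The sketch below is a simplified model and not how nginx is implemented: it assumes a single page and a fixed lifetime, and the helper name `count_backend_renders` is made up for illustration.

```python
def count_backend_renders(request_times_ms, ttl_ms):
    """Count requests that miss a single-page cache with a fixed lifetime."""
    renders = 0
    expires_at = None  # nothing cached yet
    for t in sorted(request_times_ms):
        if expires_at is None or t >= expires_at:
            renders += 1             # cache empty or expired: PHP builds the page
            expires_at = t + ttl_ms  # the cached copy is served until this moment
    return renders


# 200 requests spread evenly over one second (one every 5 ms)
burst = [i * 5 for i in range(200)]
print(count_backend_renders(burst, ttl_ms=1000))  # 1
print(count_backend_renders(burst, ttl_ms=500))   # 2
```

In this model, everything except one or two requests per second is answered from the cache, no matter how large the burst is.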

Problem: This works well only for GET and HEAD requests. POST requests won't be cached. You also need to remember that any content generated dynamically (counters, timestamps etc.) will be frozen during the cache lifetime. By default, nginx does not cache responses that carry a Set-Cookie header, and it honors the Expires and Cache-Control headers sent by the backend (for example, Cache-Control: no-cache or private prevents caching).

Systems which track logged-in users will also experience some difficulties. If you're using cookies to keep track of a user's session, you can add rules to the micro-cache configuration to skip caching when those cookies are present, or you can match the URL path and skip caching for selected paths.

If your site experiences traffic spikes due to bot activity (e.g. Googlebot), you may want to evaluate the User-Agent header and set longer micro-caching periods (10s - 100s) for bots, as crawlers usually do not need super fresh data.

As mentioned before, for this to work well you will need to store cached files in fast storage; here we assume shared RAM space is available. You do not need a large amount of RAM, a few MB will likely suffice.

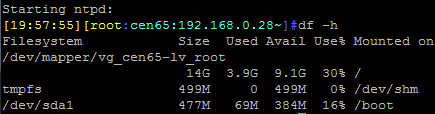

To verify how big your tmpfs is (499MB on my CentOS installation), run the command below:

df -h

This is the usual place to store cached files: tmpfs. tmpfs is a common name for a temporary file storage facility on many Unix-like operating systems. On most Linux distributions it is mounted under /dev/shm. A detailed explanation of what /dev/shm is and how to set it up can be found in the excellent article What Is /dev/shm And Its Practical Usage on www.cyberciti.biz. You will need terminal access to read or change the available space. However, tmpfs is usually set up during Linux installation, so most likely it will be available to you straight away.

Resource: What Is /dev/shm And Its Practical Usage
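If you prefer to check the available space from a script rather than reading the df -h output, something along these lines could work. It is only a sketch: the function names are made up, and it assumes tmpfs is mounted at /dev/shm as described above.

```python
import os
import shutil

MIB = 1024 * 1024


def tmpfs_report(path="/dev/shm"):
    """Return total, used and free space of the filesystem holding `path`, in MiB."""
    usage = shutil.disk_usage(path)
    return {
        "total_mb": usage.total // MIB,
        "used_mb": usage.used // MIB,
        "free_mb": usage.free // MIB,
    }


def cache_fits(max_size_mb, path="/dev/shm"):
    """True if a cache limited to `max_size_mb` fits into the currently free space."""
    return max_size_mb <= tmpfs_report(path)["free_mb"]


if __name__ == "__main__" and os.path.isdir("/dev/shm"):
    print(tmpfs_report())
    print("200 MB cache fits:", cache_fits(200))
```

The 200 in the last line mirrors the max_size=200M value used in the configuration later in this article.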

OK, I want it. How do I set up nginx micro-cache?

You will need to change your nginx config files in two places:

- in nginx.conf

- inside the server {} block; the file location depends on your virtual domain setup. We like to create a separate config file under the /etc/nginx/conf.d/ folder.

nginx.conf

Please adjust the maximum cache size (max_size) to your environment. With the cache in tmpfs it has to fit into RAM (tmpfs can also spill into swap); if you use a regular folder on disk instead, it is limited by the free disk space.

## set cache dir, hierarchy, keys zone name and size, and total size

fastcgi_cache_path /dev/shm/microcache levels=1:2 keys_zone=microcache:5M max_size=200M inactive=2h;

## set cache log location, so you can evaluate hits

log_format cache '$remote_addr - $remote_user [$time_local] "$request" '

'$status $upstream_cache_status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

## Set a cache_uid variable for authenticated users.

map $http_cookie $cache_uid {

default nil;

~SESS[[:alnum:]]+=(?<session_id>[[:alnum:]]+) $session_id;

}

## mapping cache to request method

map $request_method $no_cache {

default 1; # by default do not cache

HEAD 0; # cache HEAD requests

GET 0; # cache GET requests

}

Now we will explain what we did.

fastcgi_cache_path /dev/shm/microcache levels=1:2 keys_zone=microcache:5M max_size=200M inactive=2h;

fastcgi_cache_path /dev/shm/microcache defines the path where cached files are stored. It can be anywhere, but it makes sense to use shared RAM for best performance.

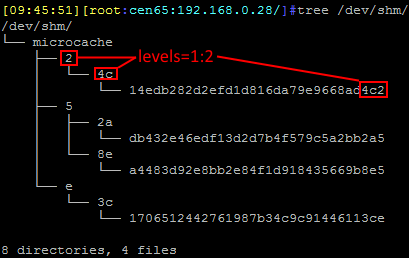

levels=1:2 defines the hierarchy levels of the cache. With the above configuration, file names in the cache will look like this:

/dev/shm/microcache/c/29/b7f54b2df7773722d382f4809d65029c

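Basically it is one parent folder (1 character in length) and a child folder (2 characters in length) containing the hashed file names. The file name is the MD5 hash of the cache key, which is set up later with fastcgi_cache_key. The parent folder is named after the last character of that hash and the child folder after the two characters before it.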
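To make the folder layout more tangible, here is a small sketch that derives the file location for a given cache key the way levels=1:2 is described above. The function name and the example key are assumptions for illustration; the key imitates the `$scheme$host$request_uri$request_method` format configured later, with a made-up host.

```python
import hashlib
from pathlib import PurePosixPath


def cache_file_path(cache_root, cache_key, levels=(1, 2)):
    """Build the on-disk path for a cache key: MD5 file name plus level folders."""
    digest = hashlib.md5(cache_key.encode("utf-8")).hexdigest()
    path = PurePosixPath(cache_root)
    end = len(digest)
    for width in levels:
        # each level takes `width` characters, walking backwards from the end of the hash
        path /= digest[end - width:end]
        end -= width
    return str(path / digest)


# hypothetical key: scheme + host + request URI + method
print(cache_file_path("/dev/shm/microcache", "httpexample.com/microtime.phpGET"))
```

Listing the cache folder on a running server and comparing it with the output for a known URL is a simple way to confirm which key a cached file belongs to.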

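keys_zone=microcache:5M sets the name (microcache) and the size (5 MB) of the shared memory zone which holds the cache keys and metadata. It does not limit the size of an individual cached file. According to the nginx documentation, one megabyte can store about 8 thousand keys, so 5M leaves room for roughly 40,000 cached entries.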

max_size=200M sets the maximum size of the whole cache. With the cache stored in tmpfs, this cannot exceed the memory available to tmpfs (RAM + swap at most); a cache kept in a regular folder on disk is limited by disk space instead.

inactive=2h removes an item from the cache if it has not been hit for 2 hours. By default inactive is set to 10 minutes.

map $http_cookie $cache_uid {

default nil;

~SESS[[:alnum:]]+=(?<session_id>[[:alnum:]]+) $session_id;

}

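If you use sessions for authentication, this trick makes it possible to micro-cache pages for authenticated users, provided you haven't changed the default name of the session cookie in PHP (PHPSESSID). Note that $cache_uid only has an effect once it is used somewhere, for example as part of fastcgi_cache_key; the cache key shown later in this article does not include it.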
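The map above can be tried out offline before touching the server. The sketch below mimics it in Python under two assumptions: the POSIX class `[[:alnum:]]` is rewritten as `[A-Za-z0-9]`, because Python's `re` module does not support POSIX classes, and the function name `cache_uid` is simply borrowed from the variable name.

```python
import re

# Same pattern as in the map block, with [[:alnum:]] spelled out for Python
SESSION_RE = re.compile(r"SESS[A-Za-z0-9]+=(?P<session_id>[A-Za-z0-9]+)")


def cache_uid(cookie_header):
    """Return the session id found in a Cookie header, or "nil" like the map default."""
    match = SESSION_RE.search(cookie_header or "")
    return match.group("session_id") if match else "nil"


print(cache_uid("PHPSESSID=abc123def456; theme=dark"))  # abc123def456
print(cache_uid("theme=dark"))                           # nil
```

The pattern is not anchored, which is why the default PHPSESSID cookie matches: the "SESSID=" part inside the name satisfies it.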

## set cache log location, so you can evaluate hits

log_format cache '$remote_addr - $remote_user [$time_local] "$request" '

'$status $upstream_cache_status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

Note: the space between the double and single quotes at the end of each line is important, e.g. "$request" '

The rest of the settings mentioned above should be self-explanatory. Also note that the micro-cache settings have to precede any inclusion of server {} blocks.
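Once an access log is written in this format, the hit rate can be estimated with a short script. This is a sketch only: it assumes the log lines follow the `cache` format exactly as defined above, and the sample lines are invented for illustration rather than taken from a real server.

```python
import re
from collections import Counter

# Matches: "$request" $status $upstream_cache_status $body_bytes_sent "$http_referer"
LINE_RE = re.compile(r'" (?P<status>\d{3}) (?P<cache>\S+) (?P<bytes>\d+) "')


def cache_status_counts(lines):
    """Count log lines per $upstream_cache_status value (HIT, MISS, BYPASS, ...)."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.search(line)
        if match:
            counts[match.group("cache")] += 1
    return counts


def hit_ratio(counts):
    """Share of requests answered from the cache, between 0.0 and 1.0."""
    total = sum(counts.values())
    return counts["HIT"] / total if total else 0.0


# Made-up sample lines in the log format defined above
sample = [
    '192.168.0.29 - - [13/May/2015:10:00:00 +0100] "GET /microtime.php HTTP/1.1" 200 MISS 31 "-" "weighttp/0.3" "-"',
    '192.168.0.29 - - [13/May/2015:10:00:00 +0100] "GET /microtime.php HTTP/1.1" 200 HIT 31 "-" "weighttp/0.3" "-"',
    '192.168.0.29 - - [13/May/2015:10:00:00 +0100] "GET /microtime.php HTTP/1.1" 200 HIT 31 "-" "weighttp/0.3" "-"',
    '192.168.0.29 - - [13/May/2015:10:00:01 +0100] "POST /contact.php HTTP/1.1" 200 BYPASS 120 "-" "Mozilla/5.0" "-"',
]
counts = cache_status_counts(sample)
print(dict(counts))
print(hit_ratio(counts))  # 0.5
```

For a real log, pass an open file object instead of the sample list, since the function only needs an iterable of lines.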

Set up for server {} block

This is placed in the virtual domain file /etc/nginx/conf.d/yourdomain.conf, inside the server {} block. Because we want to enable micro-caching for PHP, we place it under the PHP location.

## place under location for php

location ~ \.php$ {

# Setup var defaults

set $no_cache "";

# If non GET/HEAD, don't cache & mark user as uncacheable for 2 seconds via cookie

if ($request_method !~ ^(GET|HEAD)$) {

set $no_cache "1";

}

# Drop no cache cookie if need be

# (for some reason, add_header fails if included in prior if-block)

if ($no_cache = "1") {

add_header Set-Cookie "_mcnc=1; Max-Age=2; Path=/";

add_header X-Microcachable "0";

}

# Bypass cache if no-cache cookie is set

if ($http_cookie ~* "_mcnc") {

set $no_cache "1";

}

# Bypass cache if flag is set

fastcgi_no_cache $no_cache;

fastcgi_cache_bypass $no_cache;

fastcgi_cache microcache;

fastcgi_cache_key $scheme$host$request_uri$request_method;

fastcgi_cache_valid 200 301 302 10m;

fastcgi_cache_use_stale updating error timeout invalid_header http_500;

fastcgi_pass_header Set-Cookie;

fastcgi_pass_header Cookie;

fastcgi_ignore_headers Cache-Control Expires Set-Cookie;

# ... continue with other php related directives ...

}

The parameters are largely self-explanatory. You will need to restart nginx and php-fpm for the changes to take effect. To verify that everything is working, check whether files are being created in the micro-cache location.
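The bypass rules in the location block can be summarized in a few lines of logic. The following is a rough model of those if-blocks, not something nginx runs; the function names are made up, while the `_mcnc` cookie name comes from the configuration above.

```python
CACHEABLE_METHODS = ("GET", "HEAD")
MARKER_COOKIE = "_mcnc"  # cookie name used in the location block above


def sets_marker_cookie(method):
    """Non GET/HEAD requests get the short-lived no-cache marker cookie."""
    return method not in CACHEABLE_METHODS


def bypasses_cache(method, cookie_header=""):
    """True when the request is neither served from nor stored in the micro-cache."""
    if method not in CACHEABLE_METHODS:
        return True
    # mirrors `if ($http_cookie ~* "_mcnc")`: case-insensitive substring match
    return MARKER_COOKIE in cookie_header.lower()


print(bypasses_cache("GET"))                       # False
print(bypasses_cache("POST"))                      # True
print(bypasses_cache("GET", "_mcnc=1; theme=x"))   # True
```

The marker cookie is what lets a visitor who has just submitted a form see fresh pages for the next couple of seconds instead of a cached copy.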

System configuration

For the test to succeed, especially when testing against a Linux server running multiple threads / cores, you will need to change some default settings. The defaults may be designed for client needs, or to save resources, but they limit the performance we can achieve.

ulimit -aH # see what is your current limit

ulimit -HSn 200000 # and change it

To make it permanent, add the following lines to /etc/security/limits.conf:

* soft nofile 200000

* hard nofile 200000
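Whether the new limit is actually in effect for a process can be checked from Python's standard `resource` module (available on Unix-like systems only). This is a small sketch; the 200000 target simply mirrors the value used above and the helper names are invented.

```python
import resource

TARGET = 200000  # the nofile value set in limits.conf above


def open_file_limits():
    """Return the (soft, hard) limit on open files for the current process."""
    return resource.getrlimit(resource.RLIMIT_NOFILE)


def meets_target(limit, target=TARGET):
    """True if a limit is unlimited or at least as high as the target."""
    return limit == resource.RLIM_INFINITY or limit >= target


soft, hard = open_file_limits()
print("soft:", soft, "ok" if meets_target(soft) else "too low")
print("hard:", hard, "ok" if meets_target(hard) else "too low")
```

Keep in mind that limits are per process, so the values seen in a login shell are not necessarily the ones nginx or php-fpm run with.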

Also change the file /etc/sysctl.conf by adding the values below:

# "Performance Scalability of a Multi-Core Web Server", Nov 2007

# Bryan Veal and Annie Foong, Intel Corporation, Page 4/10

fs.file-max = 5000000

net.core.netdev_max_backlog = 400000

net.core.optmem_max = 10000000

net.core.rmem_default = 10000000

net.core.rmem_max = 10000000

net.core.somaxconn = 100000

net.core.wmem_default = 10000000

net.core.wmem_max = 10000000

net.ipv4.conf.all.rp_filter = 1

net.ipv4.conf.default.rp_filter = 1

net.ipv4.ip_local_port_range = 1024 65535

net.ipv4.tcp_congestion_control = bic

net.ipv4.tcp_ecn = 0

net.ipv4.tcp_max_syn_backlog = 12000

net.ipv4.tcp_max_tw_buckets = 2000000

net.ipv4.tcp_mem = 30000000 30000000 30000000

net.ipv4.tcp_rmem = 30000000 30000000 30000000

net.ipv4.tcp_sack = 1

net.ipv4.tcp_syncookies = 0

net.ipv4.tcp_timestamps = 1

net.ipv4.tcp_wmem = 30000000 30000000 30000000

# optionally, avoid TIME_WAIT states on localhost no-HTTP Keep-Alive tests:

# "error: connect() failed: Cannot assign requested address (99)"

# On Linux, the 2MSL time is hardcoded to 60 seconds in /include/net/tcp.h:

# #define TCP_TIMEWAIT_LEN (60*HZ)

# The option below is safe to use:

net.ipv4.tcp_tw_reuse = 1

# The option below lets you reduce TIME_WAITs further

# but this option is for benchmarks, NOT for production (NAT issues)

net.ipv4.tcp_tw_recycle = 1

Then save the changes and make the system reload the file:

sysctl -p /etc/sysctl.conf

You might also want to reboot.

Resource: More about system and benchmark settings: HTTP benchmark tools
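After reloading, it can be worth confirming that the kernel really picked up the values. On Linux, each sysctl key is exposed as a file under /proc/sys with the dots replaced by slashes, so a short check might look like the sketch below. The function names and the selection of keys are just an illustration.

```python
from pathlib import Path

# A few of the values from the sysctl.conf listing above
EXPECTED = {
    "fs.file-max": ["5000000"],
    "net.core.somaxconn": ["100000"],
    "net.ipv4.ip_local_port_range": ["1024", "65535"],
}


def sysctl_path(key, proc_root="/proc/sys"):
    """Map a sysctl key such as net.core.somaxconn to its file under /proc/sys."""
    return Path(proc_root) / key.replace(".", "/")


def read_sysctl(key, proc_root="/proc/sys"):
    """Return the current value split into fields, or None if it cannot be read."""
    try:
        return sysctl_path(key, proc_root).read_text().split()
    except OSError:
        return None


def check(expected, proc_root="/proc/sys"):
    """Return {key: True/False} telling whether each value matches the expectation."""
    return {key: read_sysctl(key, proc_root) == wanted for key, wanted in expected.items()}


for key, ok in check(EXPECTED).items():
    print(key, "ok" if ok else "differs or unreadable")
```

Values are compared as whitespace-separated fields because some keys, such as the port range, hold more than one number.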

Performance gain

To demonstrate that these settings really bring a benefit, we will run a quick test of a simple PHP page. First we test on a system without micro-cache enabled and compare it to a test with micro-cache running. We then compare it to a slightly more complex page with OOP, database calls and multiple file inclusions: the home page of this site. This is a fairly typical PHP app.

Test setup

For the test we will use a VirtualBox instance on a Windows 7 Ultimate desktop machine with an Intel 2600k processor, 1 shared core (thread) per instance and 1GB of RAM, and a Samsung F1 hard drive used exclusively for VirtualBox. While this is far from an ideal environment, and there are performance penalties, it is enough to demonstrate the performance gains. It also tries to simulate the $10 USD DigitalOcean droplet, which also uses 1 virtual CPU and 1GB of RAM.

In real life, small web sites run in virtualized environments, usually with some sort of fair usage policy attached that throttles processor cycles, possibly with worse performance penalties than our setup.

The tests will be fired by Weighttp, a more advanced load testing suite from the authors of the lighttpd web server. Among other things it supports multi-threaded firing of requests across cores. Installation is relatively easy; it comes with a bundled Python script for Waf, a Python-based framework for configuring, compiling and installing applications. Weighttp will run on a second VirtualBox instance with 4 shared cores and 1GB of RAM.

If you want to know about the advantages of this suite (and the disadvantages of others), you can read the excellent article about app server load testing written by the guys from gwan.com, linked below. The article also describes important Linux system settings for high performance in detail, e.g. removing limits on open files, TCP stack tuning and more!

HTTP benchmark tools: Apache's AB, Lighttpd's Weighttp, HTTPerf, and Nginx's "wrk".

Tested PHP app

We will test a simple PHP script which displays the micro time on each request. This is an extremely simple and fast test with only one PHP function. To put it in perspective, we will compare it against the home page of this site. That is a much more complex script in OOP style, with framework initialization (Fat-Free, F3) and database query caching, but it should nonetheless bring the average requests per second (rps) closer to a real-life scenario.

<?php

/**

* Display microtime

* Requires PHP 5, preferably PHP 5.4+

* This function is only available on operating systems that

* support the gettimeofday() system call.

*

* Returns float in format seconds.microseconds

*/

echo ' Current microtime: ' . microtime(true);

For testing our app we will use the above-mentioned Weighttp testing suite. We will use 7 available cores and vary the number of requests from 100k, through 250k, to 500k to simulate spikes of various sizes. We will run 3 tests for each request volume and discard the worst and the best value.

We will fire Weighttp from a second virtual machine. The target machine runs nginx and PHP 5.6 with php-fpm and apc opcache. The operating system is CentOS 6.5 x64. The example command below fires 100k requests with a concurrency level of 100, utilizing 7 processor threads.

weighttp -n 100000 -c 100 -t 7 -k "http://192.168.0.28/microtime.php"

Note! Weighttp does not support SSL. For this you might try a clone of weighttp called httpress, which supports SSL via the GnuTLS library and is multi-threaded and event-driven.

-n number of HTTP requests

-c number of concurrent connections (default: 1)

-k enable HTTP keep-alives (default: none)

-t number of threads of the test (default: 1, use one thread per CPU Core)

Result table 1GB / 1vCore, no micro-caching

| Number of requests | Concurrent users | Keep-alive | App vs home page | Errors | Bytes total |
|---|---|---|---|---|---|
| 100,000 | 100 | yes | 3,380 vs 36 rps | 0 vs 0 | 25,685,575 |
| 250,000 | 165 | yes | 3,090 vs 36 rps | 0 vs 0 | 64,213,964 |
| 500,000 | 150 | yes | 3,071 vs 36 rps | 0 vs 0 | 128,426,965 |

rps - requests per second

Conclusion: Without micro-caching, this relatively light virtual instance can handle a concurrency of about 165 and serves between 3,071 and 3,380 requests per second. However, at a concurrency of 200 some requests fail (about 8-10%). We are not sure why, but it is clear that they never reach the target. The server still responds with 2xx to all requests which reach it.

Result table 2GB / 2vCore, no micro-caching

We then explored the option of changing the resources of the virtual server. We changed the target virtual server to a 2GB / 2vCore instance and reduced the number of threads used by the testing suite to 6.

weighttp -n 100000 -c 100 -t 6 -k "http://192.168.0.28/microtime.php"

| Number of requests | Concurrent users | Keep-alive | App vs home page | Errors | Bytes total |
|---|---|---|---|---|---|
| 100,000 | 100 | yes | 5,417 vs 75 rps | 0 | 25,684,101 |
| 250,000 | 165 | yes | 5,449 vs 73 rps | 8 | 64,210,684 |
| 500,000 | 150 | yes | 5,461 vs 76 rps | 0 vs 0 | 128,419,436 |

rps - requests per second

Conclusion: Without micro-caching, upgrading your virtual host brings faster average responses. Concurrency still tops out at around 165, but the server is now capable of handling about 5,400 requests per second. At a concurrency of 200 we again see errors creeping in, along with loads of 502 Bad Gateway errors.

Result table 1GB / 1vCore, micro-caching enabled

Next we implemented micro-caching on our nginx instance. In columns 4-6 the values are split between the microtime test and the home page of lucasoft. The home page is around 21KB in size, which results in much more data being transferred.

Run Weighttp again as follows:

weighttp -n 100000 -c 100 -t 7 -k "http://192.168.0.28/microtime.php"

1GB / 1vCore. Microtime script vs lucasoft home page.

| Number of requests | Concurrent users | Keep-alive | App vs home page | Errors | Bytes total |
|---|---|---|---|---|---|
| 100,000 | 100 | yes | 4,148 vs 1,720 rps | 0 vs 0 | 25MB vs 2GB |
| 250,000 | 165 | yes | 5,449 vs 1,773 rps | 0 vs 9 | 63MB vs 5GB |
| 500,000 | 150 | yes | 4,189 vs 1,846 rps | 0 vs 0 | 127MB vs 10GB |

And now the same test for 2GB and 2vCores

Run Weighttp again as follows:

weighttp -n 100000 -c 100 -t 6 -k "http://192.168.0.28/microtime.php"

2GB / 2vCore. Microtime script vs lucasoft home page.

| Number of requests | Concurrent users | Keep-alive | App vs home page | Errors | Bytes total |
|---|---|---|---|---|---|
| 100,000 | 100 | yes | 5,417 vs 1,766 rps | 0 vs 0 | 25MB vs 2GB |
| 250,000 | 165 | yes | 5,449 vs 1,740 rps | 0 vs 9 | 63MB vs 5GB |
| 500,000 | 150 | yes | 5,461 vs 1,786 rps | 0 vs 0 | 127MB vs 10GB |

Conclusion: Enabling micro-caching definitely improves your virtual host's PHP performance and helps to achieve higher throughput. Concurrency seems to be less of a problem and the 1GB / 1vCore server is able to handle about 4,189 requests per second. All response codes are 2xx, which is good.

This is of course a very simple test with a single PHP function. It nevertheless indicates that we can improve performance by about 36% (from 3,071 to 4,189 rps at 500,000 requests on the 1GB / 1vCore instance). For more complex pages, where the original requests per second (rps) figure is lower, the gain can be much bigger. Some public tests suggest a default WordPress installation can see up to a ~10x improvement in rps.
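The gain can be recomputed directly from the result tables. The sketch below uses the 500,000-request rows of the 1GB / 1vCore tables (3,071 vs 4,189 rps for the microtime script, 36 vs 1,846 rps for the home page); the two helper functions are only for illustration.

```python
def percent_gain(before_rps, after_rps):
    """Relative improvement in percent."""
    return (after_rps - before_rps) / before_rps * 100


def speedup(before_rps, after_rps):
    """How many times more requests per second are served."""
    return after_rps / before_rps


# microtime script, 1GB / 1vCore, 500,000 requests
print(round(percent_gain(3071, 4189)))  # 36

# home page, 1GB / 1vCore, 500,000 requests
print(round(speedup(36, 1846), 1))      # 51.3
```

Going by these tables, the home page benefits far more than the trivial script, which fits the expectation that slower pages gain the most from micro-caching.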

If you test this on live hosting, there are many factors to consider that will give you a much lower rps for the size of your instance, e.g.:

- network performance between target and firing machine

- security policies of your host

- fair usage policies (note that cpu usage on target will shoot to 100% during test) and resource throttling

- DDoS protection of your host (if available)

Conclusion

Enabling nginx micro-caching for an average PHP app brings a significant performance boost. It is very easy to implement and should not take more than 10 minutes.

It costs you nothing and, depending on the complexity of your app, it can boost it to serve ~10x more requests per second than before. Obviously, this approach is not suitable for all apps, but it works very well for GET and HEAD requests where no real-time processing or fetching of data from external sources is involved on each request.


nginx, micro-caching - from around the web

- 40 Million hits a day on WordPress using a $10 VPS - $10/Month 1GB Ram Digital Ocean VPS running Ubuntu

- nginx home page - news and latest releases of popular and good performance web server.

- Why You Should Always Use Nginx With Microcaching - short article about micro-caching.

- How to install linux, nginx, MySQL, PHP (lemp) stack on centos 6 - quality tutorial from digitalocean.

- HTTP benchmark tools: Apache's AB, Lighttpd's Weighttp, HTTPerf, and Nginx's "wrk" - web server testing explained by authors of gwan, C powered app server.
